Natural Language Processing

Multilingual NLP

Recently, multilingual NLP models have attracted growing attention in the NLP field. One of the goals of multilingual NLP is to develop a single model that can handle multiple languages. To achieve this goal, we are working on themes such as developing models that exploit commonalities among languages and analyzing multilingual models.

Using Semantic Similarity as Reward for Reinforcement Learning in Sentence Generation

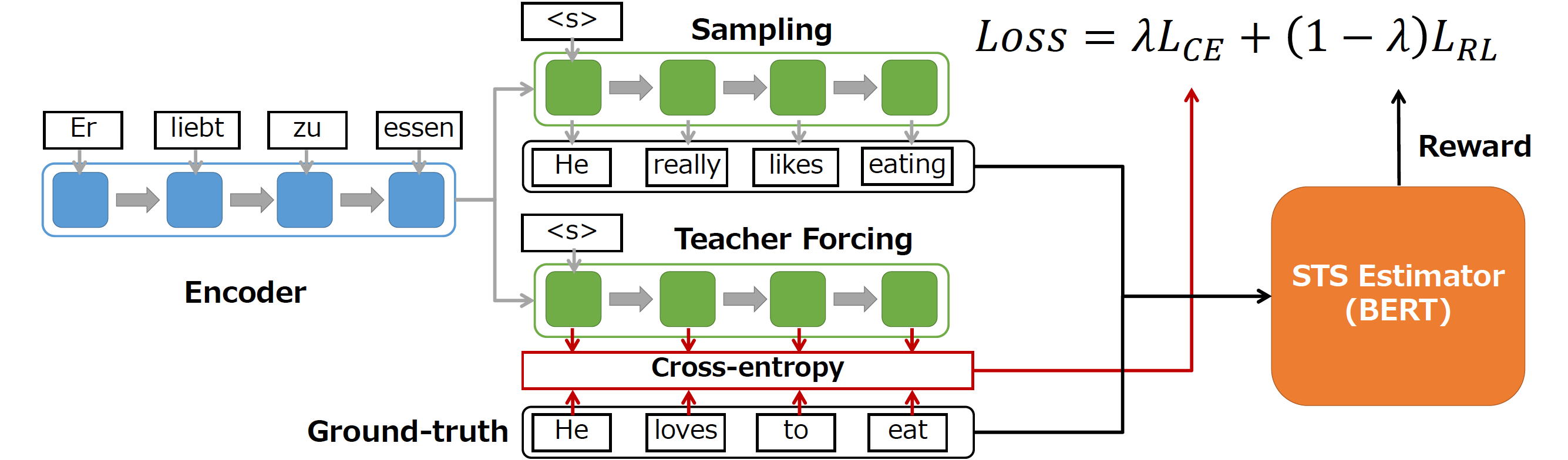

Cross-entropy loss evaluates output sentences only at the token level, so it cannot account for synonyms or changes in sentence structure. We therefore propose evaluating output sentences with more flexible criteria, such as their Semantic Textual Similarity (STS) to the ground-truth sentences, and then training the generator with Reinforcement Learning (RL), using the estimated STS scores as the reward.
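As a rough illustration of the idea, the Python sketch below first contrasts a token-level view of a sentence with a similarity-based score, then optimizes that score with a policy-gradient method. Everything in it is a hypothetical stand-in: the four-dimensional word vectors in `EMB`, the bag-of-words cosine in `sts` (which, unlike a trained STS estimator, also ignores word order), the per-position categorical "generator", and the helpers `token_match`, `train`, and `expected_reward`. The source does not name its RL algorithm; REINFORCE with a moving-average baseline is used here only as one common choice for a sentence-level reward.

```python
import math
import random
from itertools import product

# Hypothetical toy word vectors: (determiner, noun, verb, sentiment).
# A stand-in for a learned STS estimator, NOT the model used in the source.
EMB = {
    "the": (1.0, 0.0, 0.0, 0.0),
    "film": (0.0, 1.0, 0.0, 0.0),
    "movie": (0.0, 0.9, 0.2, 0.0),      # near-synonym of "film"
    "was": (0.0, 0.0, 1.0, 0.0),
    "great": (0.0, 0.0, 0.0, 1.0),
    "excellent": (0.0, 0.0, 0.2, 0.9),  # near-synonym of "great"
    "bad": (0.0, 0.0, 0.0, -1.0),
}
VOCAB = list(EMB)
REFERENCE = ["the", "film", "was", "great"]
DIM = 4


def token_match(hyp, ref):
    """Fraction of positions holding exactly the reference token."""
    return sum(h == r for h, r in zip(hyp, ref)) / len(ref)


def sts(hyp, ref):
    """Toy STS: cosine of summed word vectors (ignores word order)."""
    a = [sum(EMB[w][d] for w in hyp) for d in range(DIM)]
    b = [sum(EMB[w][d] for w in ref) for d in range(DIM)]
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0  # vectors can cancel out, e.g. "great bad great bad"
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def expected_reward(logits, ref):
    """Exact E[sts] under the policy, enumerating all len(VOCAB)**len(ref) sentences."""
    probs = [softmax(z) for z in logits]
    total = 0.0
    for idx in product(range(len(VOCAB)), repeat=len(ref)):
        p = math.prod(probs[pos][k] for pos, k in enumerate(idx))
        total += p * sts([VOCAB[k] for k in idx], ref)
    return total


def train(ref, steps=3000, lr=0.5, seed=0):
    """REINFORCE with a moving-average baseline; reward = sts(sample, ref)."""
    rng = random.Random(seed)
    logits = [[0.0] * len(VOCAB) for _ in ref]  # one softmax per position
    baseline = 0.0
    for _ in range(steps):
        probs = [softmax(z) for z in logits]
        idx = [rng.choices(range(len(VOCAB)), weights=p)[0] for p in probs]
        reward = sts([VOCAB[k] for k in idx], ref)  # sentence-level reward
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        for pos, k in enumerate(idx):
            for j in range(len(VOCAB)):
                # d log softmax(z)[k] / d z[j] = 1[j == k] - p[j]
                grad = (1.0 if j == k else 0.0) - probs[pos][j]
                logits[pos][j] += lr * advantage * grad
    return logits


if __name__ == "__main__":
    para = ["the", "movie", "was", "excellent"]  # same meaning, new words
    neg = ["the", "film", "was", "bad"]          # opposite meaning
    print(token_match(para, REFERENCE), token_match(neg, REFERENCE))  # 0.5 0.75
    print(sts(para, REFERENCE) > sts(neg, REFERENCE))  # True
    uniform = [[0.0] * len(VOCAB) for _ in REFERENCE]
    trained = train(REFERENCE)
    print(expected_reward(uniform, REFERENCE), expected_reward(trained, REFERENCE))
```

In the usage lines, the paraphrase keeps only half of the reference tokens but scores close to 1 under the toy STS, while the sentence with the opposite meaning matches more tokens yet scores lower. That mismatch is what motivates a similarity-based reward. In a real system the generator would be a sequence model and the reward would come from a learned STS estimator rather than fixed word vectors.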
